Letting the browser speak — the Web Speech API

In my spare time I’ve been learning Chinese and building a flashcard application. At Smashing Conference San Francisco a few weeks ago, I met a few people doing the same. It seems flashcard apps are the new “todo” app: the thing engineers build when they want to learn a new technology.

“making your own cards and personalizing them will greatly increase your ability to remember new material and learn things that are important specifically to you.”

I’m designing my app around the idea of spaced repetition, and the fact that I’m building both the app and the flashcards myself is helping me learn the things that are important to me. As developers, I feel we should always be looking to combine our non-technical goals with our technical goals. The magic is where two interests/passions unite!

I’ve been showing digital flashcards of Simplified Chinese characters with their English translations and Hanyu Pinyin to help me learn how to say them, and it seems to be helping me remember them. However, I felt a voice was missing: I wanted a way to hear the words, to support auditory learning.

How to support auditory learning with the web

I looked at what others are doing, and most websites/apps either use MP3s or have no sound at all. Duolingo, for instance, appears to have sound in its app but not on its website. I’ve been trying to keep my app package size down as much as possible, with the exception of the data, because my goal is for the app to work offline. The idea of shipping lots of MP3s for all the possible sounds worried me a little, both in terms of download size and because I would need to find and source the recordings!

So I looked to the browsers, which always seem to have thought of these things before me, and found the Web Speech API.

As expected, browser support was quite low: Firefox and Chrome, with a bit of Safari sprinkled in, and all of it very experimental. I’m all for progressive enhancement, so it wasn’t the end of the world if my app only played sound in newer browsers, and I had a play with it.

It was pretty straightforward to use.

const word = '音乐';

// Feature detect

if (

window.speechSynthesis &&

typeof SpeechSynthesisUtterance !== 'undefined'

) {

const synth = window.speechSynthesis;

// get all the voices available on your browser

const voices = synth.getVoices();

// find a voice that can speak chinese

const voice = voices.

filter((voice) => voice.lang.indexOf('zh') === 0)[0];

// make the browser speak!

const utterThis = new SpeechSynthesisUtterance(word);

utterThis.voice = voice;

synth.speak(utterThis);

}

While this worked wonderfully in my desktop browser, I hit a snag when I loaded it on mobile: the flashcard game did not play any of the lovely sounds it did on my desktop.

Troubleshooting missing voices on Android

I was confident in my feature detection, so I was a little perplexed as to what was going on here. I dug a little deeper and realised that my mobile browser didn’t have a voice that could speak Chinese. I improved my code:

// find a voice that can speak chinese

const voice = voices.

filter((voice) => voice.lang.indexOf('zh') === 0)[0];

// make the browser speak if it can!

if ( voice ) {

const utterThis = new SpeechSynthesisUtterance(word);

utterThis.voice = voice;

synth.speak(utterThis);

} else {

// speak() expects an utterance, not a string, so wrap the apology in one

const utterThis = new SpeechSynthesisUtterance('I\'m afraid I cannot speak Mandarin yet.');

utterThis.voice = voices[0];

synth.speak(utterThis);

}
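One thing the `zh` prefix check does not address is which Chinese voice gets picked. On some systems a `zh-HK` voice speaks Cantonese rather than Mandarin, so a Mandarin learner may want an order of preference. Below is a minimal sketch of that idea. `pickVoice` and `sampleVoices` are hypothetical names for illustration, not part of the Web Speech API, and the handling of underscore-separated tags such as `zh_CN` is a precaution based on the assumption that some platforms report them that way.

```javascript
// Hypothetical helper, not part of the Web Speech API.
// Returns the first voice matching the earliest entry in `preferred`,
// or undefined when nothing matches.
function pickVoice(voices, preferred) {
  // Treat "zh_CN" and "zh-CN" alike and ignore case
  const normalise = (tag) => tag.replace(/_/g, '-').toLowerCase();
  for (const wanted of preferred.map(normalise)) {
    const match = voices.find((voice) => {
      const lang = normalise(voice.lang);
      return lang === wanted || lang.startsWith(wanted + '-');
    });
    if (match) {
      return match;
    }
  }
  return undefined;
}

// Plain objects stand in for SpeechSynthesisVoice here
const sampleVoices = [
  { name: 'English', lang: 'en-US' },
  { name: 'Cantonese', lang: 'zh-HK' },
  { name: 'Mandarin', lang: 'zh_CN' },
];

const chosen = pickVoice(sampleVoices, ['zh-CN', 'zh-TW', 'zh']);
console.log(chosen ? chosen.name : 'no Chinese voice found'); // logs "Mandarin"
```

In the browser, the same function could be given the result of `synth.getVoices()`. Ending the preference list with a bare `'zh'` keeps the original behaviour as a last resort.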

I found this useful webpage for detecting what voices were available on a device:

https://mdn.github.io/web-speech-api/speak-easy-synthesis/

According to this page, my mobile device only had English and Spanish installed. My phone doesn’t normally run in Chinese and I don’t view Chinese websites, so I hadn’t realised I needed this support until this specific app came along. My app did want it, though, and it had no way to communicate that to me other than by being broken.

Installing language support

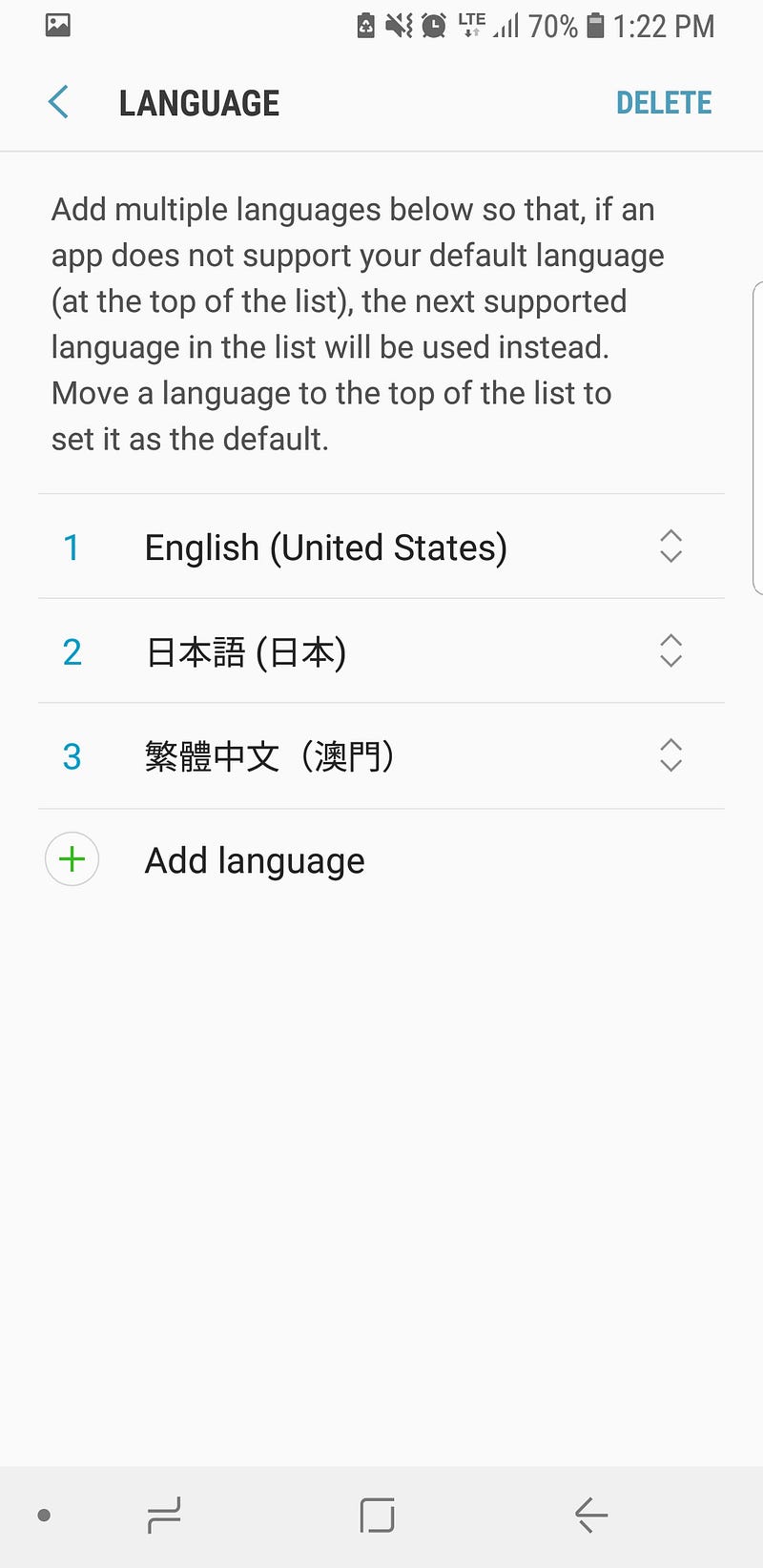

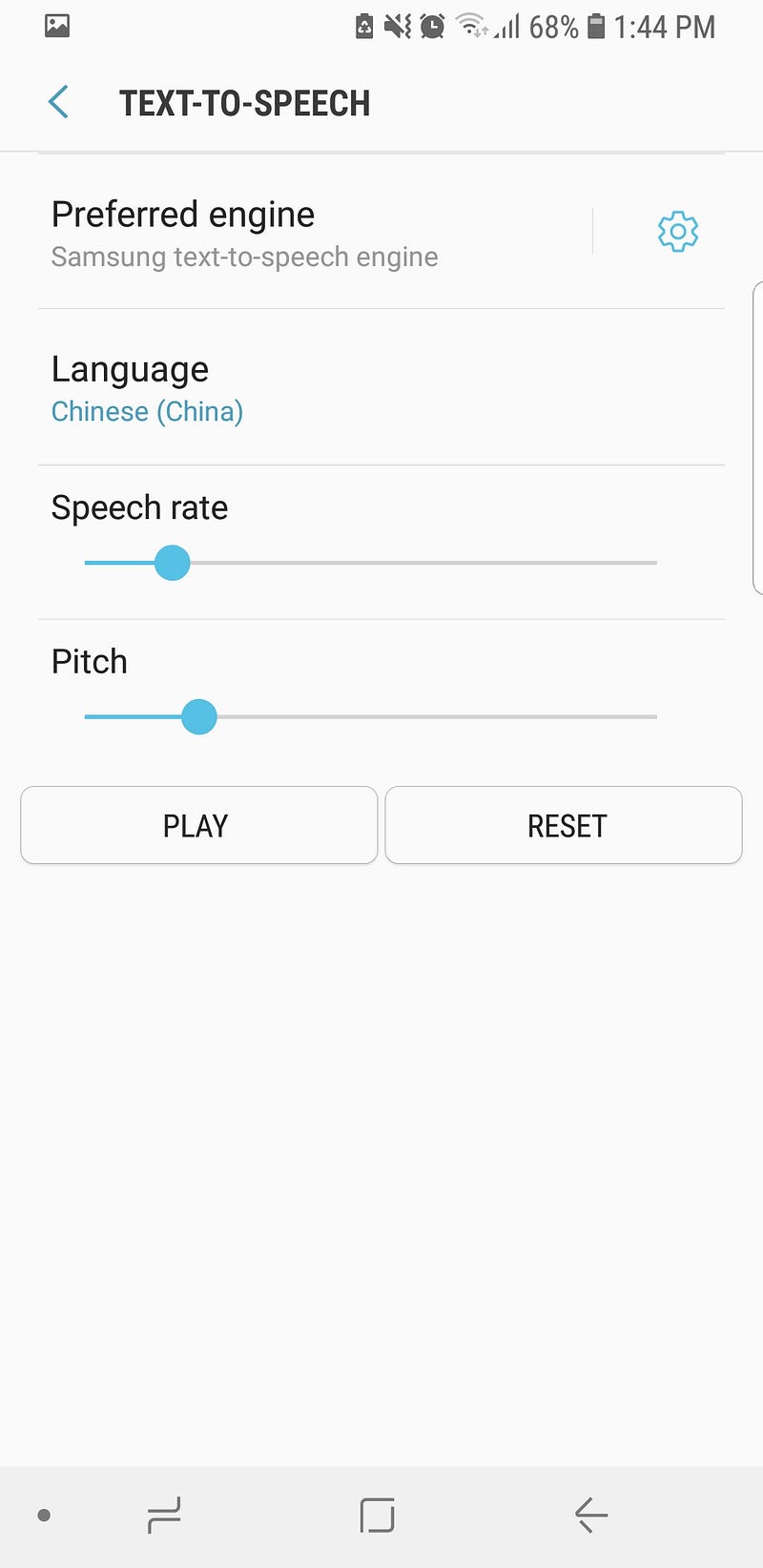

Installing Chinese was a cumbersome process. I had to fiddle with my Android settings, add the Chinese language and restart my browser.

While I or another developer could do this, it wasn’t a great experience. If I wanted to share my app with a non-technical audience, there would be no easy way for them to do this without my sending them detailed instructions. This made me sad.

I am reminded of the Nespresso coffee machine in our office, whose buttons go red when it has a common problem and which needs the manual to decipher. Its interface confuses everyone around it: they know it is broken but do not know how to fix it.

A mark of a fully thought-out API is that it has considered every step of the process. This is an experimental API, so this wasn’t really a surprise.

Imagining the API I’d like

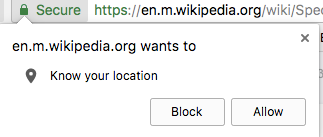

This annoyed me a little. The capability was there, but it hadn’t been prioritised. What I really wanted was an API that would let me request a language and take care of the installation if necessary, in a similar way to how I request permission to use the user’s current location or to send them push notifications.

If my user didn’t have a Chinese language installed, this script could prompt them to add support for it. They could say yes, and now they would hear the words spoken to them. If they said no, I would be able to let them know what they’re missing out on.

I imagine that would look like this:

window.speechSynthesis.getVoicesForLanguage('zh').then(()=> {

speakMandarin();

}, () => {

notifyUserSoundIsDisabled();

});
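Something with that shape can be roughly approximated with what the API already offers, with one big caveat: it cannot prompt for or install a language, only report whether a matching voice is present. The sketch below is an assumption-laden illustration, not a real browser API. The `getVoicesForLanguage` function, its `synth` and `timeoutMs` parameters and the one-second default are all hypothetical. It also waits for the `voiceschanged` event, since some browsers fill the voice list asynchronously.

```javascript
// Hypothetical stand-in for the imagined getVoicesForLanguage().
// Resolves with the matching voices, rejects when there are none.
function getVoicesForLanguage(synth, lang, timeoutMs = 1000) {
  return new Promise((resolve, reject) => {
    const settle = (voices) => {
      const matching = voices.filter((voice) => voice.lang.indexOf(lang) === 0);
      if (matching.length > 0) {
        resolve(matching);
      } else {
        reject(new Error('No voice installed for ' + lang));
      }
    };

    const initial = synth.getVoices();
    // Settle now if the list is populated or we cannot listen for changes
    if (initial.length > 0 || typeof synth.addEventListener !== 'function') {
      settle(initial);
      return;
    }

    // The list may still be loading, so wait briefly for voiceschanged
    let timer;
    const onChange = () => {
      clearTimeout(timer);
      synth.removeEventListener('voiceschanged', onChange);
      settle(synth.getVoices());
    };
    synth.addEventListener('voiceschanged', onChange);
    timer = setTimeout(onChange, timeoutMs);
  });
}

// Browser-only usage; skipped where there is no window (for example Node)
if (typeof window !== 'undefined' && window.speechSynthesis) {
  getVoicesForLanguage(window.speechSynthesis, 'zh').then(
    (voices) => {
      const utterThis = new SpeechSynthesisUtterance('音乐');
      utterThis.voice = voices[0];
      window.speechSynthesis.speak(utterThis);
    },
    (error) => {
      // A real app would tell the user that sound is unavailable
      console.warn(error.message);
    }
  );
}
```

The rejection branch is where an app could explain how to add the language in the device settings, which is as close as a page can currently get to the install prompt described above.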

Conclusions

The Web Speech API is pretty interesting. It promises speech recognition and text-to-speech, but like most APIs that are experimental and still on the standards track, it has some areas for improvement.

Like many things built by engineers, the places I feel it really needs polish are around connecting developers to end users in such a way that we can create smooth beautiful experiences.

Given that the API was conceived back in 2012 and landed in Chrome in 2014, I worry none of this will happen any time soon. Still, it seems like it could play an important role in the web and provide a powerful open alternative to native apps when it does.

Hopefully my app will be able to make use of it when it finally does.

Further reading

If the API interests you, I found the following links useful: